The latest Microsoft DP-900 Azure Data Fundamentals certification practice exam question and answer (Q&A) dumps are available free. They can help you pass the Microsoft DP-900 Azure Data Fundamentals exam and earn the Microsoft DP-900 Azure Data Fundamentals certification.

Table of Contents

- DP-900 Question 11
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 12
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 13
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 14
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 15
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 16
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 17
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 18
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 19
  - Exam Question
  - Correct Answer
  - Explanation
- DP-900 Question 20
  - Exam Question
  - Correct Answer
  - Explanation

DP-900 Question 11

Exam Question

What should you use to build a Microsoft Power BI paginated report?

A. Charticulator

B. Power BI Desktop

C. the Power BI service

D. Power BI Report Builder

Correct Answer

D. Power BI Report Builder

Explanation

Power BI Report Builder is the standalone tool for authoring paginated reports for the Power BI service.

DP-900 Question 12

Exam Question

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Statement 1: Azure Synapse Analytics scales storage and compute independently.

Statement 2: Azure Synapse Analytics can be paused to reduce compute costs.

Statement 3: An Azure Synapse Analytics data warehouse has a fixed storage capacity.

Correct Answer

Statement 1: Azure Synapse Analytics scales storage and compute independently: Yes

Statement 2: Azure Synapse Analytics can be paused to reduce compute costs: Yes

Statement 3: An Azure Synapse Analytics data warehouse has a fixed storage capacity: No

Explanation

Statement 1: Azure Synapse Analytics scales storage and compute independently: Yes

Compute is separate from storage, which enables you to scale compute independently of the data in your system.

Statement 2: Azure Synapse Analytics can be paused to reduce compute costs: Yes

You can use the Azure portal to pause and resume the dedicated SQL pool compute resources.

Pausing the data warehouse pauses compute. If the data warehouse is paused for an entire hour, you are not charged for compute during that hour.
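To make the hourly billing rule concrete, here is a minimal Python sketch. It assumes compute is billed per whole hour, so an hour is charged if the pool was online for any part of it and is free only if the pool was paused for the entire hour. `HOURLY_COMPUTE_RATE` is a hypothetical figure, not a real Azure price.

```python
# Hypothetical hourly compute rate; real prices depend on region and DWU level.
HOURLY_COMPUTE_RATE = 1.20


def billable_compute_hours(online_minutes_per_hour):
    """Count the hours in which the pool was online at all.

    Assumption: an hour is billed if the pool ran for any part of it,
    and it is free only if the pool was paused for the entire hour.
    """
    return sum(1 for minutes in online_minutes_per_hour if minutes > 0)


def compute_cost(online_minutes_per_hour, rate=HOURLY_COMPUTE_RATE):
    """Multiply billable hours by the (hypothetical) hourly rate."""
    return billable_compute_hours(online_minutes_per_hour) * rate


# Online for 8 full hours, 15 minutes of a ninth hour, then paused for 15 hours.
day = [60] * 8 + [15] + [0] * 15
print(billable_compute_hours(day))  # 9
print(round(compute_cost(day), 2))  # 10.8
```

Under this assumption, the 15 fully paused hours cost nothing for compute, which is why pausing a pool outside working hours reduces compute costs.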

Statement 3: An Azure Synapse Analytics data warehouse has a fixed storage capacity: No

Storage is sold in 1 TB allocations. If you grow beyond 1 TB of storage, your storage allocation automatically grows to 2 TB.
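The allocation rule amounts to rounding used storage up to the next whole terabyte. A minimal Python sketch, assuming a minimum allocation of 1 TB; the function name is illustrative only:

```python
import math


def allocated_storage_tb(used_tb):
    """Round used storage up to whole 1 TB allocations (assumed minimum: 1 TB)."""
    return max(1, math.ceil(used_tb))


for used in (0.4, 1.0, 1.3, 2.7):
    print(f"{used} TB used -> {allocated_storage_tb(used)} TB allocated")
```

Because the allocation keeps growing with the data, the warehouse has no fixed storage capacity.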

DP-900 Question 13

Exam Question

Match the Azure services to the appropriate requirements. Each service may be used once, more than once, or not at all.

Services:

- Azure Data Factory

- Azure Data Lake Storage

- Azure SQL Database

- Azure Synapse Analytics

Requirements:

- Output data to Parquet format

- Store data that is in Parquet format

- Persist a tabular representation of data that is stored in Parquet format

Correct Answer

Azure Data Factory: Output data to Parquet format

Azure Data Lake Storage: Store data that is in Parquet format

Azure Synapse Analytics: Persist a tabular representation of data that is stored in Parquet format

Explanation

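Azure Data Factory: Output data to Parquet format

Azure Data Factory supports Parquet as a dataset format, so copy activities and data flows can write their output as Parquet files.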

Azure Data Lake Storage: Store data that is in Parquet format

Azure Data Lake Storage can hold files in any format, including Parquet. Note that ADLA stands for Azure Data Lake Analytics, a separate processing service that added a public preview of a native extractor and outputter for the Parquet file format.

Azure Synapse Analytics: Persist a tabular representation of data that is stored in Parquet format

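In an Azure Synapse Analytics workspace, you can define external tables over Parquet files in the data lake, or load the data into tables in a dedicated SQL pool, to persist a tabular representation of the data.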

DP-900 Question 14

Exam Question

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Statement 1: A pipeline is a representation of a data structure within Azure Data Factory.

Statement 2: Azure Data Factory pipelines can execute other pipelines.

Statement 3: A processing step within an Azure Data Factory pipeline is an activity.

Correct Answer

Statement 1: A pipeline is a representation of a data structure within Azure Data Factory: No

Statement 2: Azure Data Factory pipelines can execute other pipelines: Yes

Statement 3: A processing step within an Azure Data Factory pipeline is an activity: Yes

Explanation

Statement 1: A pipeline is a representation of a data structure within Azure Data Factory: No

A pipeline is a logical grouping of activities that together perform a task.

Statement 2: Azure Data Factory pipelines can execute other pipelines: Yes

Data Factory supports pipeline hierarchies: the Execute Pipeline activity lets one pipeline invoke another pipeline.

Statement 3: A processing step within an Azure Data Factory pipeline is an activity: Yes

An activity represents a single processing step in a pipeline, and a pipeline is a logical grouping of activities that together perform a task.
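The relationship between pipelines, activities, and nested pipelines can be sketched as a Data Factory pipeline definition built in Python. The JSON shape (`activities`, `ExecutePipeline`, `PipelineReference`, `dependsOn`) follows the Data Factory pipeline schema as we understand it, while the pipeline and activity names are hypothetical. Treat it as an illustration, not a deployable definition.

```python
import json


def execute_pipeline_activity(name, child_pipeline, depends_on=None):
    """Build an Execute Pipeline activity: one step that invokes another pipeline."""
    activity = {
        "name": name,
        "type": "ExecutePipeline",
        "typeProperties": {
            "pipeline": {"referenceName": child_pipeline, "type": "PipelineReference"},
            "waitOnCompletion": True,
        },
    }
    if depends_on:
        # Run this step only after the named activity succeeds.
        activity["dependsOn"] = [
            {"activity": depends_on, "dependencyConditions": ["Succeeded"]}
        ]
    return activity


# A pipeline is a logical grouping of activities, not a data structure
# (in Data Factory, datasets represent data structures within data stores).
parent_pipeline = {
    "name": "ParentPipeline",  # hypothetical name
    "properties": {
        "activities": [
            execute_pipeline_activity("RunIngest", "IngestPipeline"),
            execute_pipeline_activity("RunTransform", "TransformPipeline", depends_on="RunIngest"),
        ]
    },
}

print(json.dumps(parent_pipeline, indent=2))
```

Each entry in `activities` is one processing step, and both steps here call child pipelines, which is how a pipeline hierarchy is formed.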

DP-900 Question 15

Exam Question

To complete the sentence, select the appropriate option in the answer area.

In a data warehousing workload, data __________.

Answer Area:

A. from a single source is distributed to multiple locations

B. from multiple sources is combined in a single location

C. is added to a queue for multiple systems to process

D. is used to train machine learning models

Correct Answer

B. from multiple sources is combined in a single location

Explanation

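A data warehouse consolidates data from multiple sources into a single, central location for analysis and reporting.

Note: The data warehouse workload encompasses: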

- The entire process of loading data into the warehouse

- Performing data warehouse analysis and reporting

- Managing data in the data warehouse

- Exporting data from the data warehouse
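The core idea, combining data from multiple sources in a single location, can be sketched with Python's built-in `sqlite3` module. The in-memory database stands in for a warehouse, and the two source systems, their records, and the `dim_`/`fact_` table names are all hypothetical.

```python
import sqlite3

# Two hypothetical source systems with different shapes.
crm_customers = [("C1", "Contoso"), ("C2", "Fabrikam")]
web_orders = [
    {"customer": "C1", "amount": 120.0},
    {"customer": "C2", "amount": 80.0},
    {"customer": "C1", "amount": 30.0},
]

warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE dim_customer (customer_id TEXT PRIMARY KEY, name TEXT)")
warehouse.execute("CREATE TABLE fact_sales (customer_id TEXT, amount REAL)")

# Load: bring data from each source into the single warehouse location.
warehouse.executemany("INSERT INTO dim_customer VALUES (?, ?)", crm_customers)
warehouse.executemany("INSERT INTO fact_sales VALUES (:customer, :amount)", web_orders)

# Analysis: one query now spans data that came from both sources.
rows = warehouse.execute(
    """SELECT c.name, SUM(f.amount)
       FROM fact_sales AS f
       JOIN dim_customer AS c ON c.customer_id = f.customer_id
       GROUP BY c.name
       ORDER BY c.name"""
).fetchall()
print(rows)  # [('Contoso', 150.0), ('Fabrikam', 80.0)]
```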

DP-900 Question 16

Exam Question

Match the types of workloads to the appropriate scenarios. Each workload type may be used once, more than once, or not at all.

Workload Types:

- Batch

- Streaming

Scenarios:

- Analyzing historical data containing web traffic collected during the previous year.

- Classifying images that were uploaded last month.

- Tracking in real time how many people are currently using a website.

Correct Answer

Batch: Analyzing historical data containing web traffic collected during the previous year.

Batch: Classifying images that were uploaded last month.

Streaming: Tracking in real time how many people are currently using a website.

Explanation

Batch: Analyzing historical data containing web traffic collected during the previous year.

The batch processing model requires a set of data that has been collected over time, while the stream processing model requires data to be fed into an analytics tool in real time, often in micro-batches.

The batch processing model handles a large batch of data, while the stream processing model handles individual records or micro-batches of a few records.

Batch processing operates over all or most of the data, whereas stream processing operates over a rolling window of data or the most recent record.

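Batch: Classifying images that were uploaded last month.

The images were collected in the past, so they can be processed together as a batch.

Streaming: Tracking in real time how many people are currently using a website.

Counting current users requires processing each visit event as it arrives.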
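The difference between the two models can be sketched in Python. The batch function processes a complete, already-collected data set in one pass; the streaming class processes each event as it arrives and keeps only a rolling window. The event format `(user, timestamp)`, the 300-second window, and the assumption that events arrive in timestamp order are all illustrative.

```python
from collections import deque


def batch_page_views_per_user(events):
    """Batch: process the whole collected data set at once."""
    counts = {}
    for user, _timestamp in events:
        counts[user] = counts.get(user, 0) + 1
    return counts


class ActiveUserCounter:
    """Streaming: handle one event at a time over a rolling time window.

    Assumes events arrive in timestamp order.
    """

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, user) pairs still inside the window

    def add(self, user, timestamp):
        self.events.append((timestamp, user))
        # Evict events that have fallen out of the rolling window.
        while self.events and self.events[0][0] <= timestamp - self.window_seconds:
            self.events.popleft()
        # Distinct users seen within the window = "currently active" users.
        return len({u for _, u in self.events})


history = [("alice", 0), ("bob", 10), ("alice", 400)]
print(batch_page_views_per_user(history))  # {'alice': 2, 'bob': 1}

counter = ActiveUserCounter(window_seconds=300)
for user, ts in history:
    print(ts, counter.add(user, ts))  # 0 1, then 10 2, then 400 1
```

The batch result covers all of the history, while the streaming count at time 400 only reflects the last 300 seconds.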

DP-900 Question 17

Exam Question

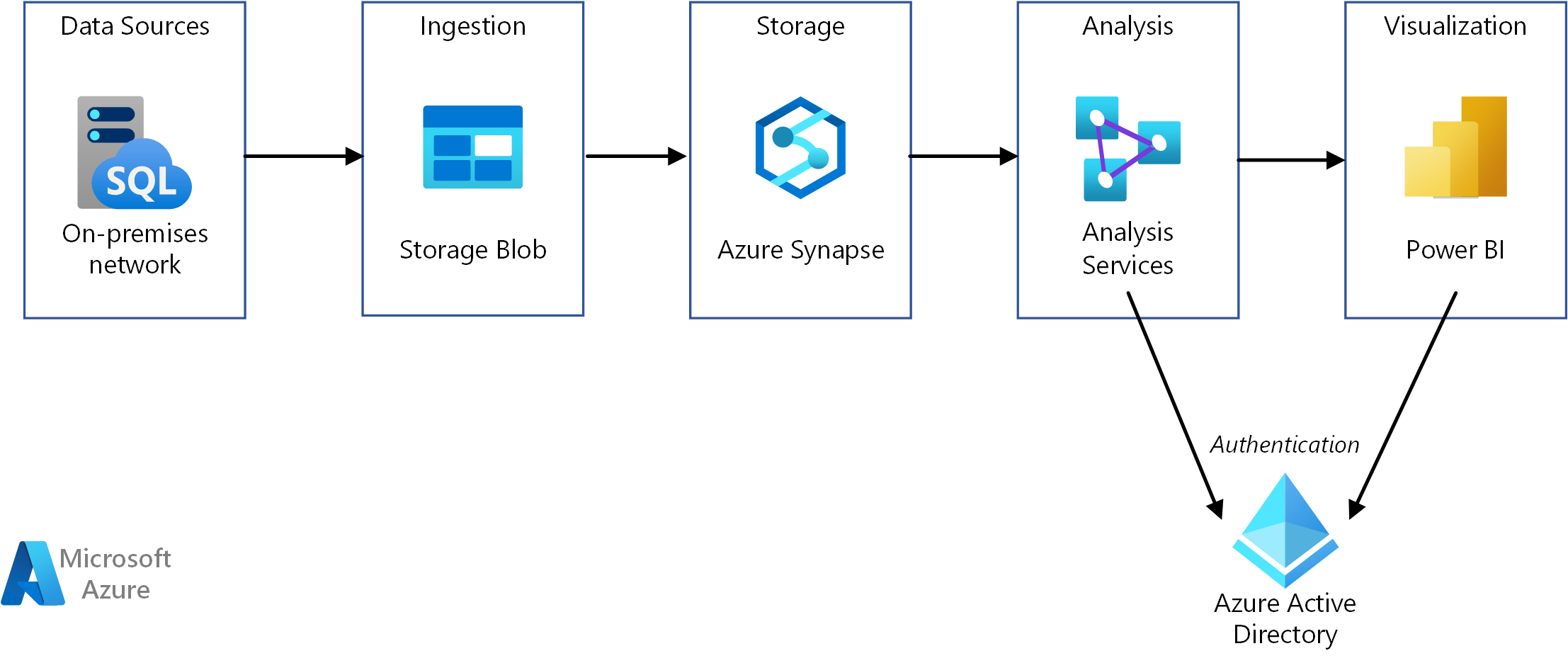

Match the Azure services to the appropriate locations in the architecture. Each service may be used once, more than once, or not at all.

Correct Answer

Box Ingest: Azure Data Factory

Box Preprocess & model: Azure Synapse Analytics

Explanation

Box Ingest: Azure Data Factory

You can build a data ingestion pipeline with Azure Data Factory (ADF).

Box Preprocess & model: Azure Synapse Analytics

Use Azure Synapse Analytics to preprocess data and deploy machine learning models.

DP-900 Question 18

Exam Question

What is the primary purpose of a data warehouse?

A. to provide answers to complex queries that rely on data from multiple sources

B. to provide transformation services between source and target data stores

C. to provide read-only storage of relational and non-relational historical data

D. to provide storage for transactional line-of-business (LOB) applications

Correct Answer

C. to provide read-only storage of relational and non-relational historical data

Explanation

Consider using a data warehouse when you need to keep historical data separate from the source transaction systems for performance reasons. Data warehouses make it easy to access historical data from multiple locations, by providing a centralized location using common formats, keys, and data models.

Data warehouses also let you query both relational and nonrelational data.

Incorrect Answers:

D: Storage for transactional line-of-business applications is the role of OLTP databases. Data warehouses don't need to follow the same terse data structure you may be using in your OLTP databases.

DP-900 Question 19

Exam Question

What are three characteristics of an Online Transaction Processing (OLTP) workload?

A. denormalized data

B. heavy writes and moderate reads

C. light writes and heavy reads

D. schema defined in a database

E. schema defined when reading unstructured data from a database

F. normalized data

Correct Answer

B. heavy writes and moderate reads

D. schema defined in a database

F. normalized data

Explanation

B: Transactional data tends to be heavy writes, moderate reads.

D: Transactional data typically uses schema on write, which is strongly enforced. The schema is defined in the database.

F: Transactional data tends to be highly normalized.
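The OLTP traits above (schema defined in the database, enforced on write, normalized tables) can be sketched with Python's built-in `sqlite3` module. The `customers`/`orders` tables and their constraints are hypothetical; SQLite stands in for any relational OLTP database.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Normalized schema: customer details live in one table; orders reference them by key.
db.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    quantity INTEGER NOT NULL CHECK (quantity > 0)
);
""")

# Small, frequent writes, each validated against the schema at write time.
with db:
    db.execute("INSERT INTO customers (customer_id, email) VALUES (1, 'a@example.com')")
    db.execute("INSERT INTO orders (customer_id, quantity) VALUES (1, 2)")


def try_insert(sql):
    """Run one write in its own transaction; report whether the schema accepted it."""
    try:
        with db:
            db.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


print(try_insert("INSERT INTO orders (customer_id, quantity) VALUES (99, 1)"))  # unknown customer
print(try_insert("INSERT INTO orders (customer_id, quantity) VALUES (1, 0)"))   # fails the CHECK
```

Rejecting bad rows at write time is what "schema on write" means, in contrast to schema on read, where structure is applied only when data is queried.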

DP-900 Question 20

Exam Question

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Statement 1: You can copy a dashboard between Microsoft Power BI workspaces.

Statement 2: A Microsoft Power BI dashboard can only display visualizations from a single dataset.

Statement 3: A Microsoft Power BI dashboard can display visualizations from a Microsoft Excel workbook.

Correct Answer

Statement 1: You can copy a dashboard between Microsoft Power BI workspaces: No

Statement 2: A Microsoft Power BI dashboard can only display visualizations from a single dataset: No

Statement 3: A Microsoft Power BI dashboard can display visualizations from a Microsoft Excel workbook: Yes

Explanation

Statement 1: You can copy a dashboard between Microsoft Power BI workspaces: No

You can duplicate a dashboard, but the duplicate is created in the same Power BI workspace.

At the time of writing, there was no built-in functionality to copy or move dashboards or reports from one workspace to another.

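Statement 2: A Microsoft Power BI dashboard can only display visualizations from a single dataset: No

A dashboard can display tiles pinned from multiple reports, and those reports can be based on different datasets.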

Statement 3: A Microsoft Power BI dashboard can display visualizations from a Microsoft Excel workbook: Yes

You can pin a range from an Excel workbook to a Power BI dashboard as a tile.